ISA - The Intelligent Systems Assistant 7409 2024-09-05

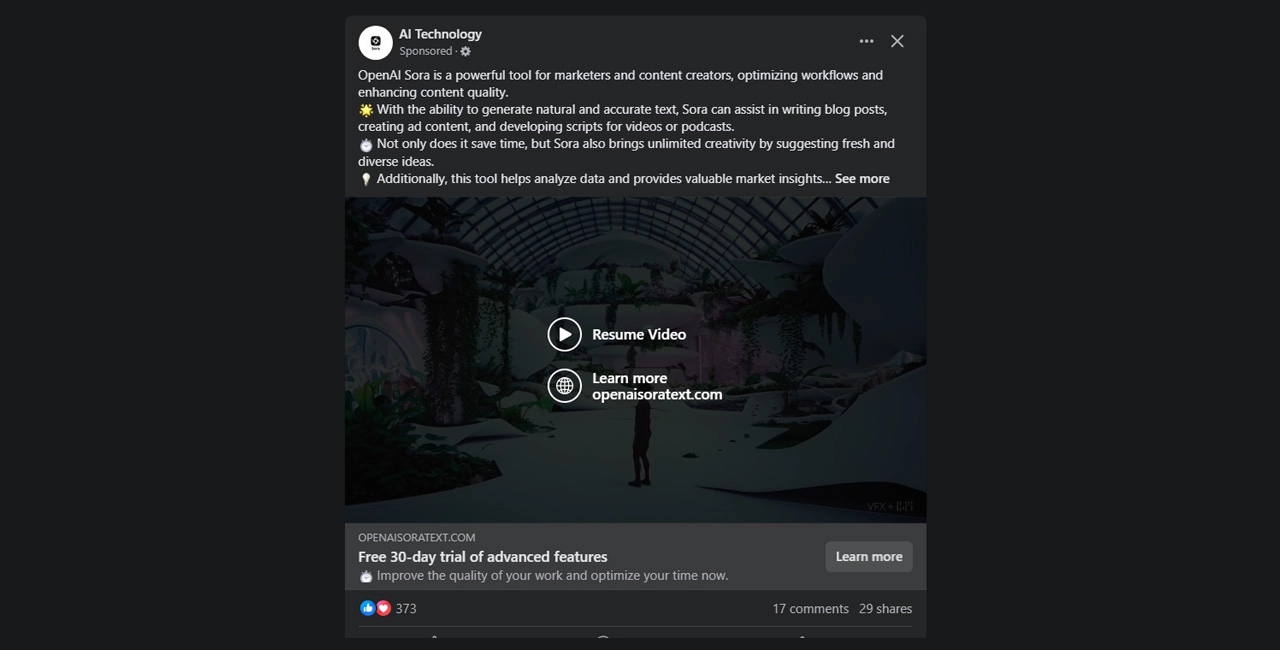

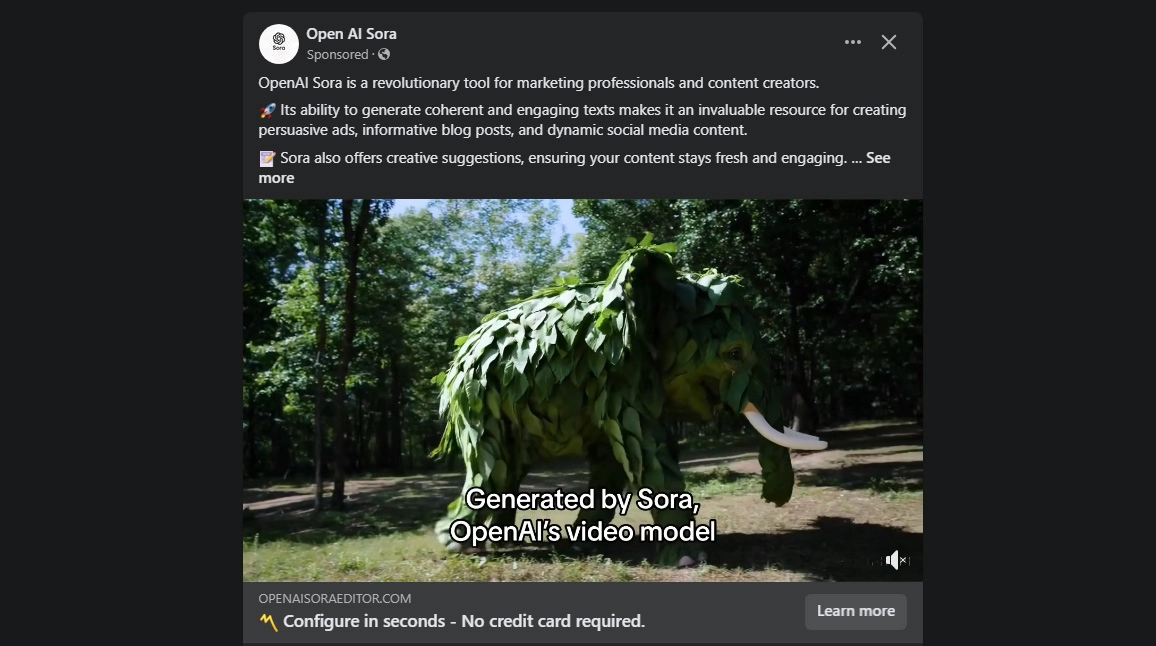

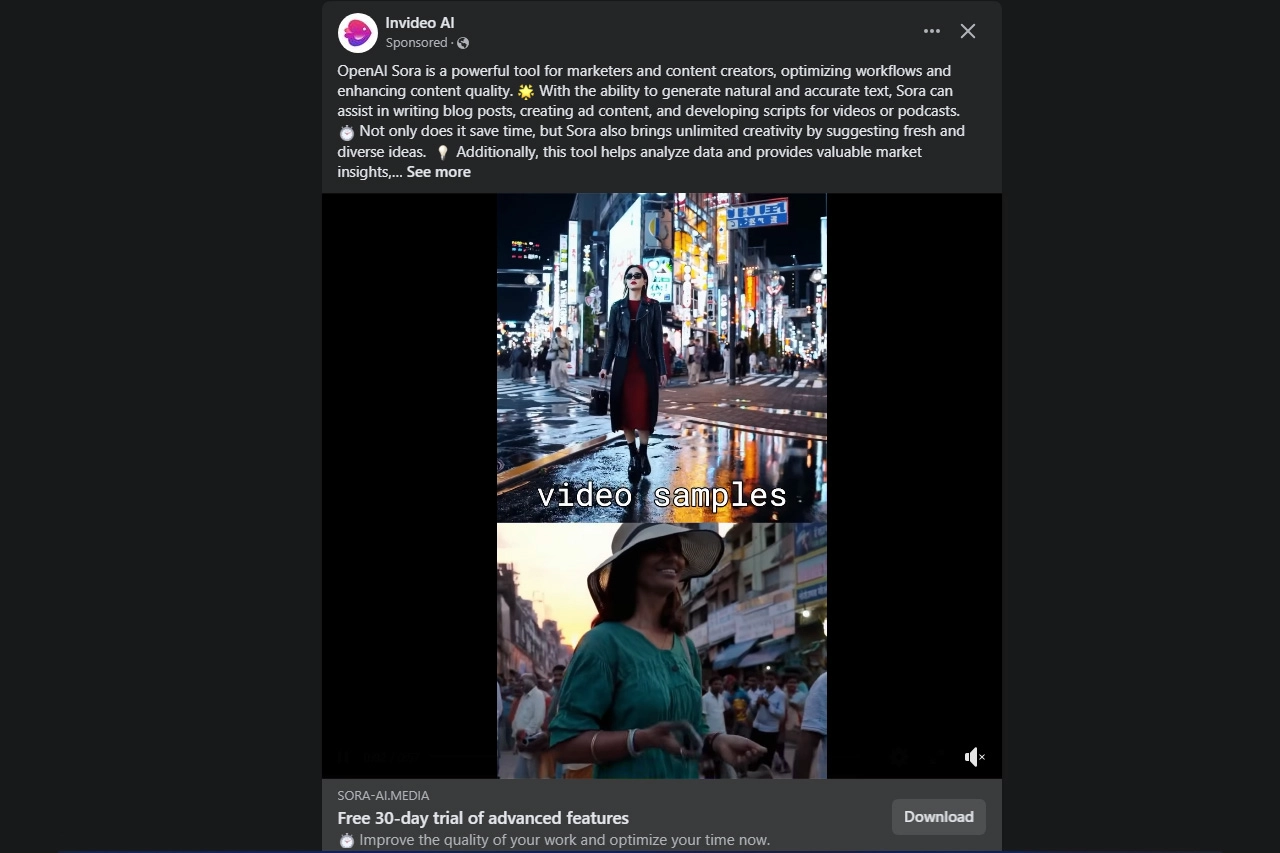

Facebook users are facing a new and serious threat: fraudulent pages impersonating OpenAI's cutting-edge Sora technology. These deceptive accounts, often with minimal or no followers, are utilizing Facebook's advertising platform to promote supposedly free access to OpenAI Sora, an AI model currently restricted to a select group of researchers. This fraudulent activity presents a significant danger to unsuspecting individuals, potentially exposing them to malicious software and various cyber risks.

The proliferation of these scams aligns with the growing public fascination surrounding AI-generated videos and text-to-video technologies. As artificial intelligence continues to progress, innovations like Sora exemplify the most advanced video generation capabilities. However, this excitement has created an environment ripe for exploitation by bad actors seeking to take advantage of people's curiosity and eagerness to access such groundbreaking technologies.

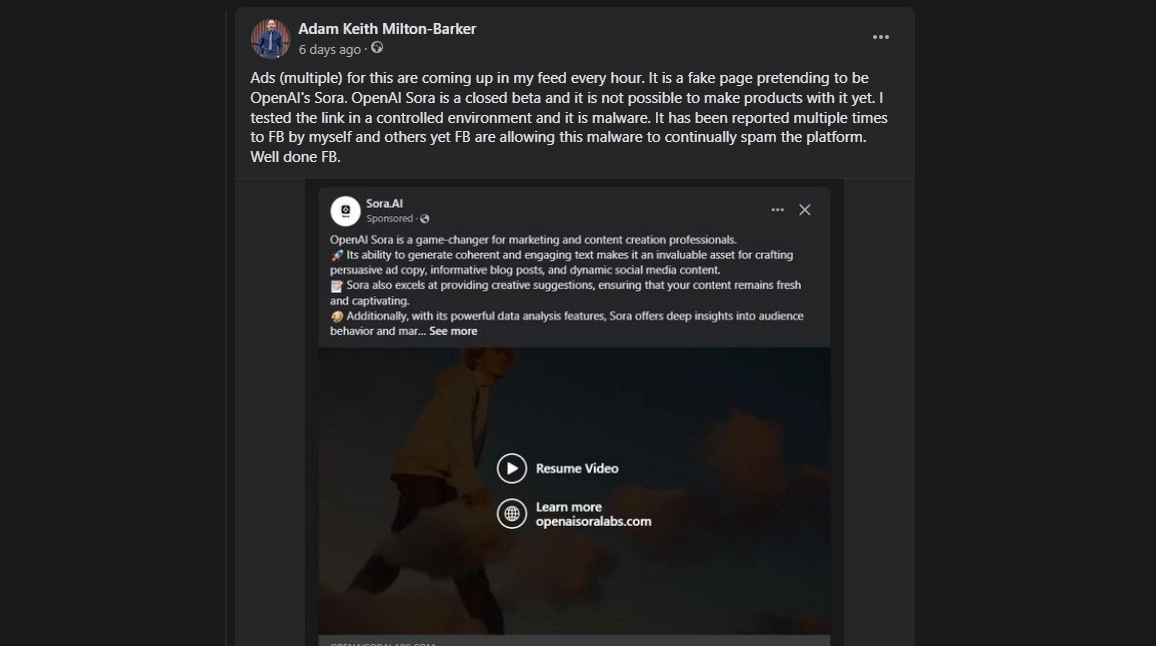

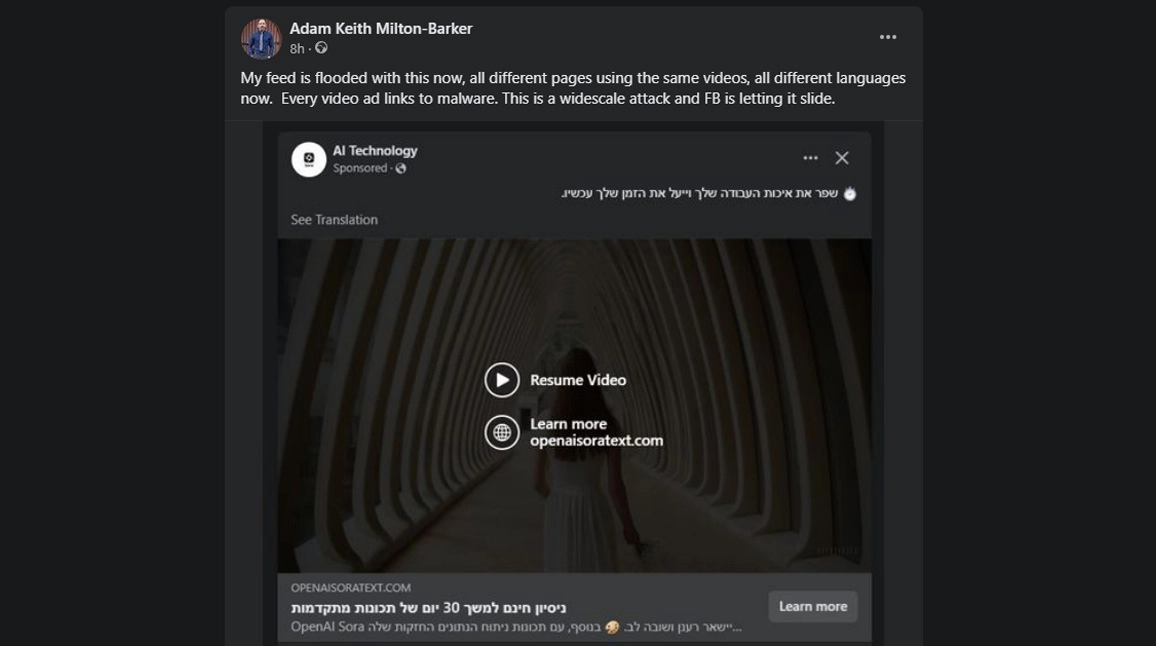

The malware continues to spread on Facebook, with no resistance from Meta. The scammers are now also passing themselves off as the popular AI video app InVideo. As the adverts attract more likes, shares, and comments, the reach of the malware expands.

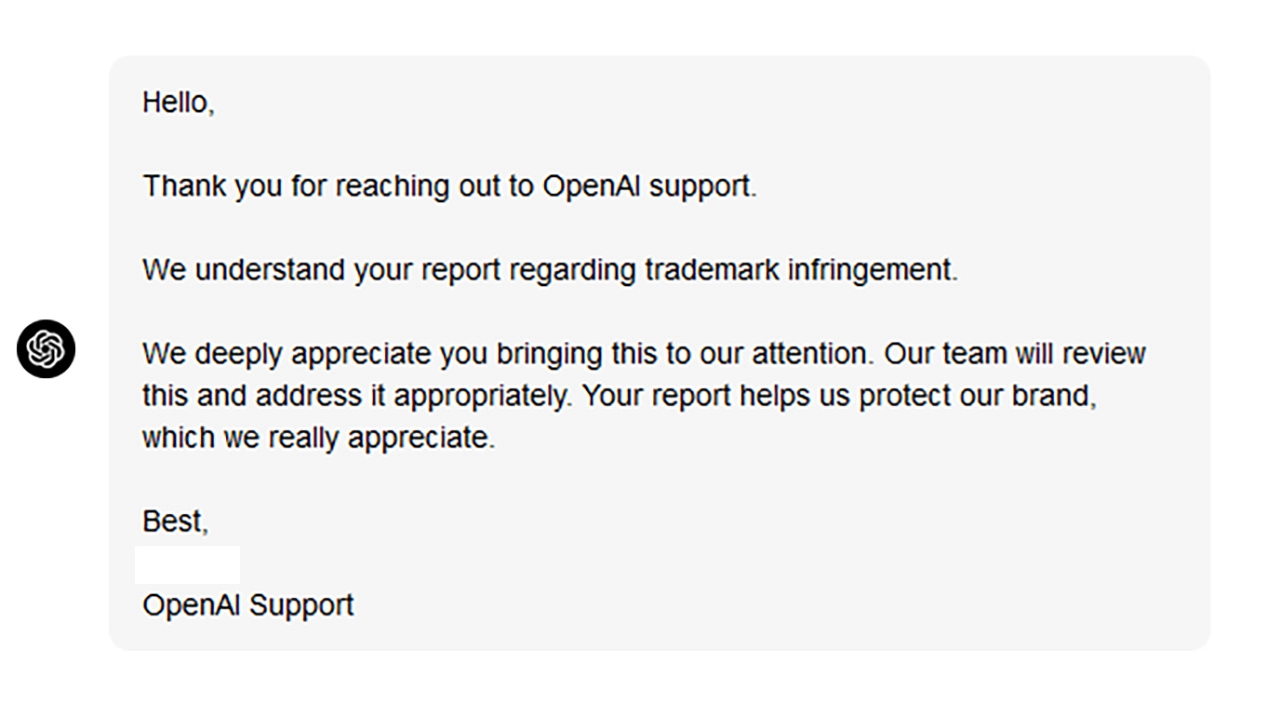

We have made OpenAI aware of the issue, and they responded quite quickly. Meanwhile, Facebook continues to allow the malware to spread.

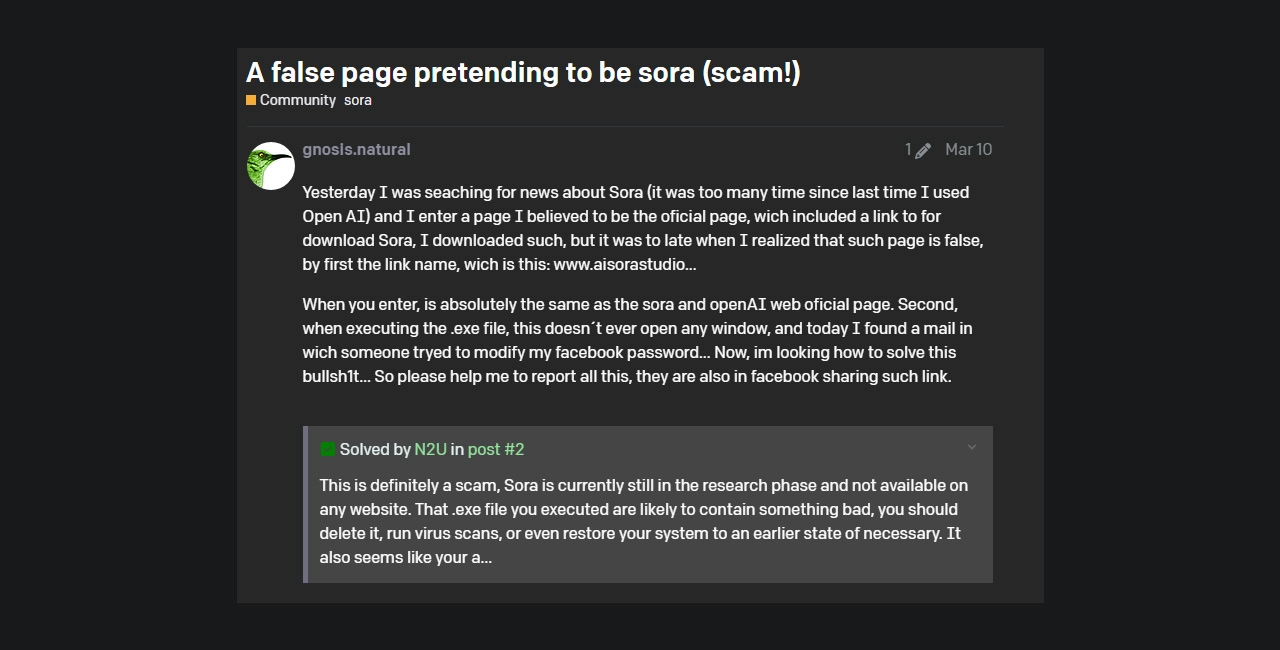

We found that this malware has been circulating on Facebook since March 2024. The first report appeared on OpenAI's developer forum on March 10th, 2024, from a user going by the name gnosis.natural, who explained that the day after installing the malware via the downloaded exe file, they received an email saying there had been an attempt to reset their Facebook password.

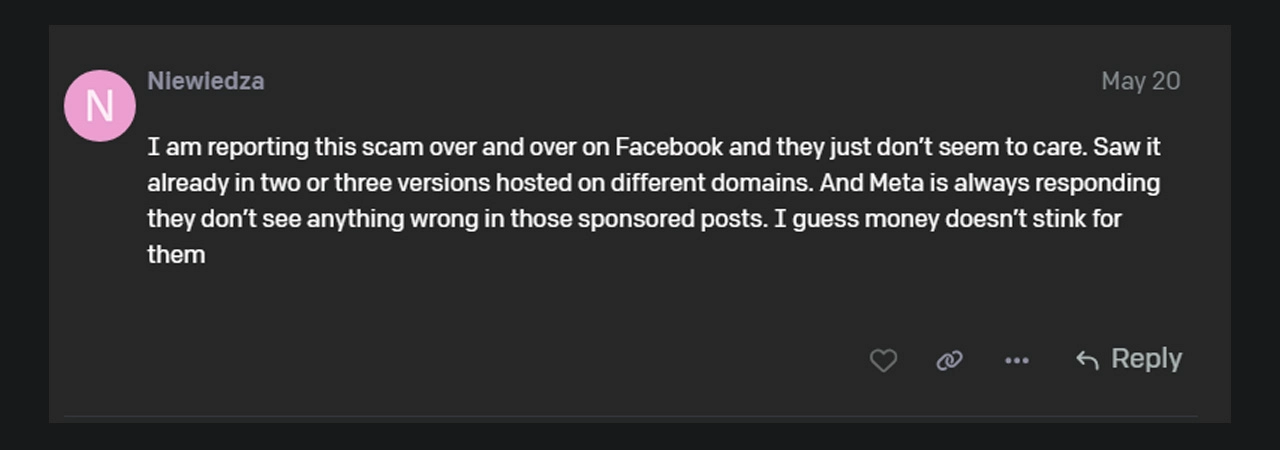

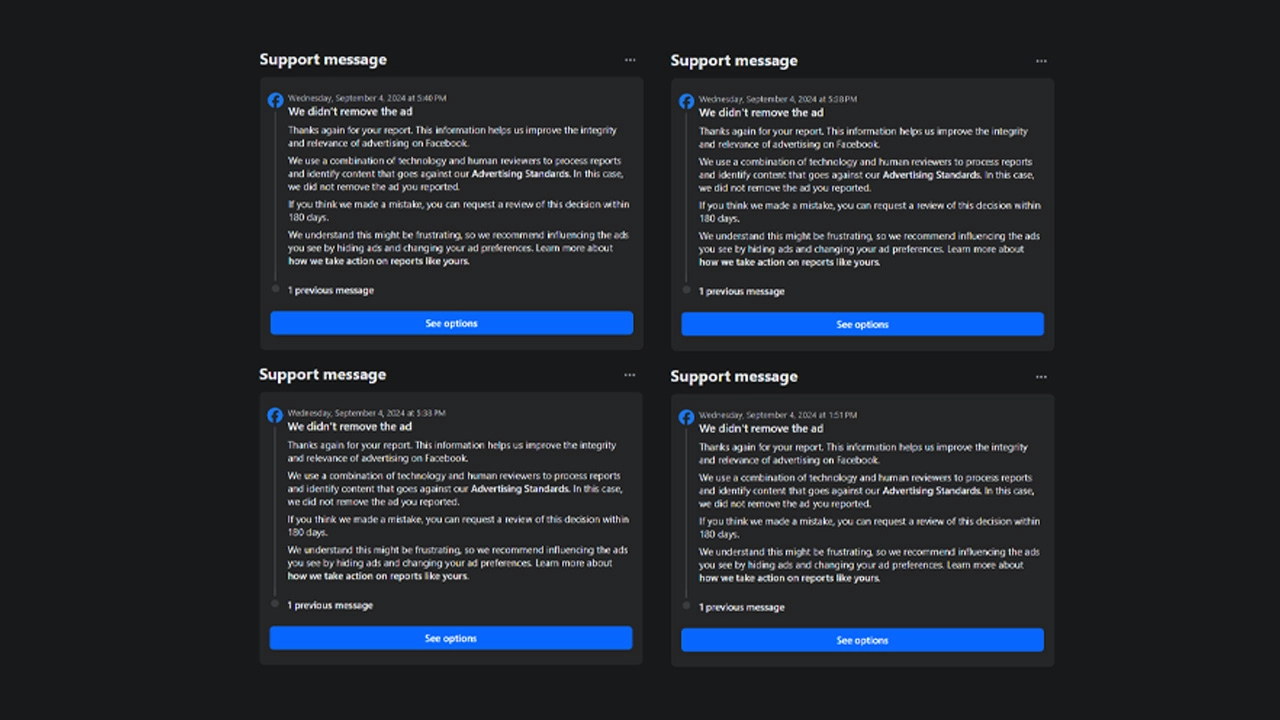

Multiple people have joined the conversation to discuss the issue, with users mentioning that they reported the ads to Facebook and that no action was taken.

OpenAI's Sora is a state-of-the-art AI model created for text-to-video generation. It employs advanced transformer architecture to produce photorealistic and imaginative videos based on text prompts. The system's ability to create cinematic-quality content lasting up to a minute has captured significant attention in both technological and creative communities.

Sora's capabilities extend beyond basic video creation. The model showcases a profound understanding of language, enabling it to interpret complex instructions and generate videos featuring consistent characters and expressive emotions. This advanced functionality has made Sora an attractive target for scammers looking to exploit its popularity and potential.

The scam operates through a series of deceptive steps, starting with Facebook ads. These advertisements, often showcasing impressive AI-produced video content, offer free access to Sora. However, clicking on these ads sets in motion a dangerous sequence of events:

The complexity of these scams has increased over time, making them more challenging for average users to identify and avoid.

When the scam first appeared approximately two weeks ago, the tactics were relatively straightforward. Facebook advertisements led directly to links infected with malware. Users who clicked on these links unknowingly downloaded harmful software onto their devices. While effective, this direct approach was also easier for security systems and vigilant users to detect.

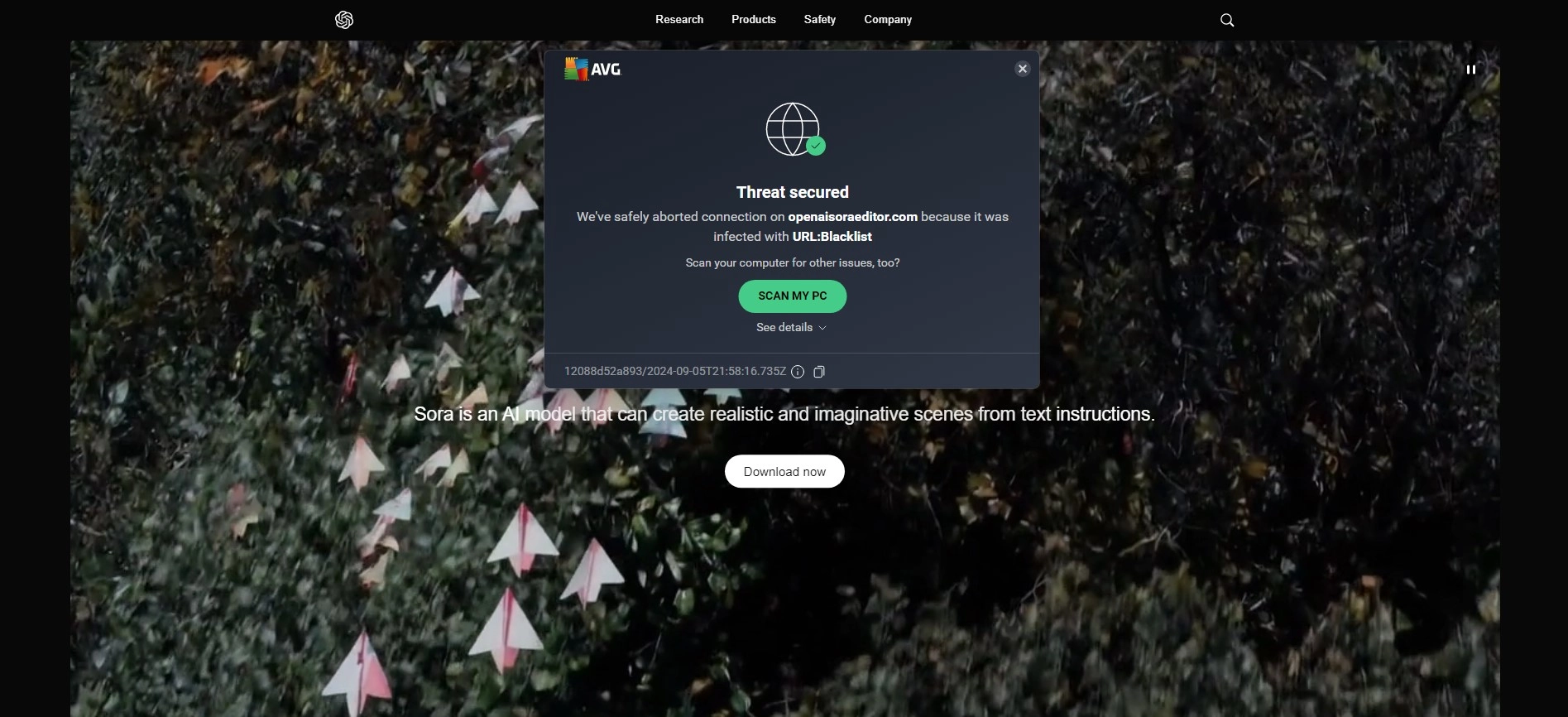

As awareness of the initial scam grew, the perpetrators refined their methods. The latest iteration of the scam involves creating clone websites that closely resemble OpenAI's official Sora page. These fake sites often feature a "Download Now" button, which, when clicked, initiates the malware infection process. This more sophisticated approach significantly complicates users' ability to distinguish between legitimate and fraudulent offers.
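One way to tell a clone site from the real one is to look at the hostname rather than the page design. The following is a minimal sketch of that check, not a complete defense. It assumes `openai.com` is the only domain to treat as official; the function name `is_official_host` and the example URLs are hypothetical and exist only for illustration.

```python
from urllib.parse import urlsplit

# Assumption for this sketch: the only domain treated as official.
OFFICIAL_DOMAINS = {"openai.com"}


def is_official_host(url: str) -> bool:
    """Return True if the URL's hostname is an official domain or a subdomain of one."""
    # urlsplit().hostname is lowercased and excludes any user:password@ prefix and port.
    host = (urlsplit(url).hostname or "").rstrip(".")
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


if __name__ == "__main__":
    # Hypothetical URLs: one official address and two lookalike patterns.
    for url in (
        "https://openai.com/sora",
        "https://openai.com.sora-free.example/download",
        "https://openai.com@sora-free.example/",
    ):
        print(url, is_official_host(url))
```

The comparison is made on the parsed hostname, not on the raw string, because lookalike addresses can contain the official name as a prefix or as a fake login part before an `@`. A URL without a scheme has no parsed hostname here and is rejected. A match on the hostname says nothing about whether a download is safe, so this is only a first filter.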

Furthermore, the scam has begun to spread internationally, with pages and advertisements appearing in multiple languages. This expansion increases the potential pool of victims and complicates efforts to contain and combat the threat.

| Scam Evolution | Initial Tactic | Current Tactic |
|---|---|---|
| Link Destination | Direct Malware | Cloned Website |
| Geographic Reach | Limited | International |
| Language | Primarily English | Multiple Languages |

While the precise number of users impacted by this scam remains uncertain, the potential reach is substantial. Given the viral nature of social media and the widespread appeal of AI technology, it's possible that thousands, if not tens of thousands, of users have encountered these fraudulent advertisements. The international expansion of the scam further complicates efforts to quantify its impact accurately.

Despite numerous reports from users and cybersecurity experts, Facebook's response to this threat has been inadequate. Many users have reported that their attempts to flag these fraudulent ads have been rejected, allowing the scam to continue spreading unchecked. This lack of decisive action from one of the world's largest social media platforms has raised concerns about the effectiveness of current safeguards against such sophisticated scams.

To protect against these and similar scams, users should keep the following points in mind:

It's crucial to remember that Sora is currently only accessible to a select group of researchers and red teamers for testing and evaluation purposes. OpenAI has not announced any public release date or pricing information for Sora, making any offer of free or early access highly suspect.

The OpenAI Sora scam serves as a stark reminder of the constantly changing nature of cyber threats in an age of rapid technological advancement. As AI and generative AI technologies continue to capture public imagination, we can expect scammers and cybercriminals to exploit this interest with increasingly sophisticated tactics.

Protecting oneself requires a combination of skepticism, awareness, and proactive security measures. Users must stay informed about the latest developments in AI technology while remaining cautious about offers that seem too good to be true. Additionally, social media platforms like Facebook need to improve their efforts to identify and remove fraudulent content quickly.

As we continue to explore the exciting possibilities of AI in filmmaking and content creation, it's essential to approach new technologies with both enthusiasm and caution. By staying vigilant and informed, we can enjoy the benefits of AI advancements while minimizing the risks posed by those who seek to exploit our curiosity and trust.

ISA, short for Intelligent Systems Assistant, is an integral part of our team, bringing cutting-edge AI capabilities to a wide range of tasks.
